Infrastructure at MoEngage: Growing at Scale with CASCAD


Co-authored by Pulkit Vaishnav and Sudip Maji

Like many startups, when we first started developing applications at MoEngage, we developed all our services as a single unified system, and all configurations were shipped along with the code during release cycles. Slowly, we moved some of our applications to microservices. In the initial phases, we shipped code three to four times a week to keep up with our competition and stay ahead. Over time, we built several teams and embraced a microservice architecture.

Our infrastructure has come a long way, from under 100 servers to 3,000 servers running at any point in time. The number of configurations grew steadily along with our applications, and we have also deployed our services in multiple regions. To maintain configurations, we have used several tools, such as ZooKeeper, S3, Ansible, and Chef. We wanted a configuration system that is easy to maintain, understandable for developers, container friendly, and transparent.

The SRE team at MoEngage is responsible for enabling dev teams to ship their code faster and more reliably. We are accountable for creating a seamless experience for the more than 300 million users we track for our clients; hence, it was imperative for us to ensure that any change propagates smoothly. This post covers configuration management at MoEngage. It is part of a three-part blog series in which we share our internal learnings and the services we have built, such as EasySSH (SSH login management for teams), along with MongoDB upgrade learnings, deployments, and more.

Managing Configuration with Centrally Aware Solution to Configure Applications Dynamically (CASCAD)

We were on the lookout for a configuration management system for the different processes that run on our systems, ranging from monitoring and logging to applications, whose configurations change in between release cycles. The usual recommendation for version-controlled applications is to ship configuration along with the application package itself.

1. Our Requirements

The configuration management system should do the following things for us and do them well:

- Support file-based configurations and manage the respective services when their configurations change.

- Dynamically manage configurations on remote machines from a central repository/machine.

- Be secure, lightweight, scalable, and resilient.

Systems and tools considered:

There are heaps of options for configuration management, but we wanted something that met our requirements to a T. We compared many tools and decided to choose Consul with Conf.d because it:


- Offers simplicity, low cost, and room for future use cases;

- Provides scalability;

- Is easy to maintain;

- Delivers high availability;

- Makes it easy to manage multiple configuration files;

- Supports Vault, making it easier to manage secrets; and

- Can also be used for service discovery.

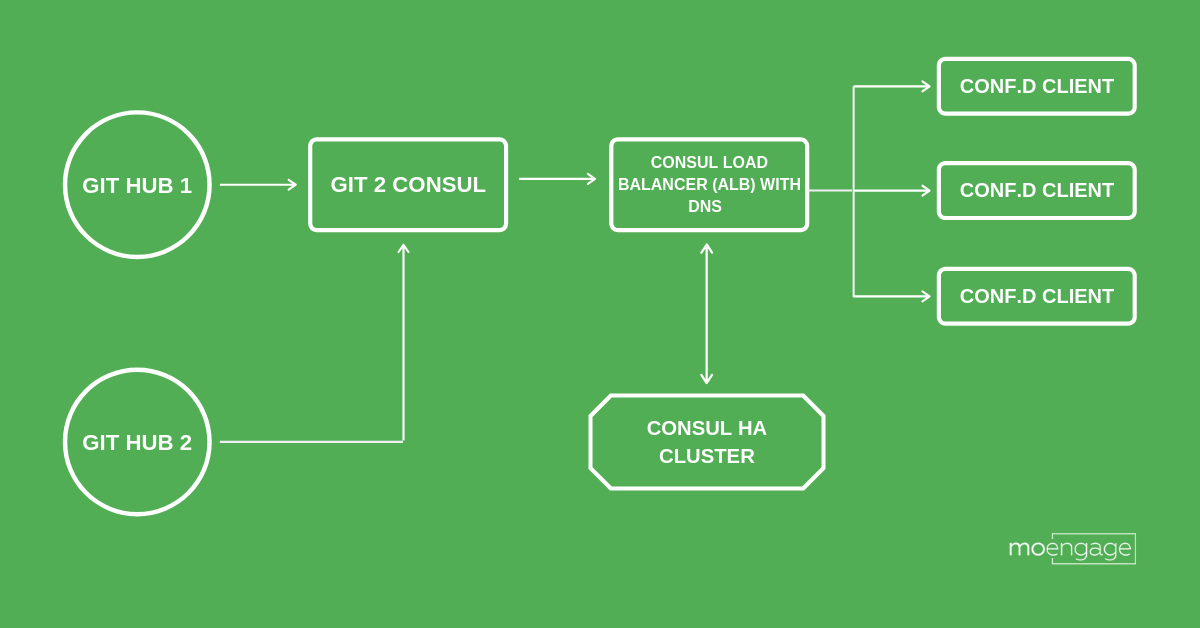

2. High-Level Architecture Diagram

a. Git2Consul

Git2Consul is a small service that tracks configurations in Git and updates the Consul backend on any change (similar to the Jenkins Git plugin, but without any management overhead). You can read more about it here. For our use case, i.e., to allow hundreds of files in our Git repo, we increased the default buffer size from 200KB to 5MB. You may change this based on your use case.
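To make the Git-to-Consul flow concrete, here is a minimal Python sketch of the kind of sync Git2Consul performs. It is not Git2Consul's code: it assumes each file in a checked-out repository maps to a Consul key named <prefix>/<relative path>, works out which keys need to be written or deleted, and applies the changes through Consul's KV HTTP endpoint (PUT and DELETE on /v1/kv/<key>). The function names and the prefix are hypothetical.

```python
import urllib.request
from pathlib import Path
from typing import Dict, List, Tuple


def to_consul_key(prefix: str, relative_path: str) -> str:
    """Map a repository-relative file path to a KV key under a (hypothetical) prefix."""
    return f"{prefix.strip('/')}/{relative_path.lstrip('/')}"


def load_repo_files(repo_dir: str, prefix: str) -> Dict[str, str]:
    """Read every regular file in the checkout (skipping .git) into {key: content}."""
    root = Path(repo_dir)
    entries: Dict[str, str] = {}
    for path in sorted(root.rglob("*")):
        rel = path.relative_to(root)
        if path.is_file() and ".git" not in rel.parts:
            entries[to_consul_key(prefix, rel.as_posix())] = path.read_text()
    return entries


def plan_sync(local: Dict[str, str], remote: Dict[str, str]) -> Tuple[Dict[str, str], List[str]]:
    """Return (keys to write, keys to delete) so that Consul ends up mirroring Git."""
    to_put = {k: v for k, v in local.items() if remote.get(k) != v}
    to_delete = sorted(k for k in remote if k not in local)
    return to_put, to_delete


def apply_sync(consul_url: str, to_put: Dict[str, str], to_delete: List[str]) -> None:
    """Push the plan to Consul's KV HTTP API, one request per key."""
    for key, value in to_put.items():
        req = urllib.request.Request(f"{consul_url}/v1/kv/{key}", data=value.encode(), method="PUT")
        urllib.request.urlopen(req, timeout=10).close()
    for key in to_delete:
        req = urllib.request.Request(f"{consul_url}/v1/kv/{key}", method="DELETE")
        urllib.request.urlopen(req, timeout=10).close()
```

Only keys whose content actually differs are written, so untouched files generate no writes to Consul on each Git change.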

b. Consul

Consul is primarily used for service discovery, but it also offers a key-value (KV) store, which is what we leverage in this flow. The simple HTTP API that Consul offers makes it easy to use. We have placed it behind a load balancer with SSL. Consul also supports multiple data centers out of the box, which makes it easier to deal with multiple regions. You can read more about it here.
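As an illustration of that HTTP API, the following Python sketch reads every key under a prefix with a recursive GET on /v1/kv/<prefix>?recurse and decodes the response. Consul returns a JSON array in which each entry's Value field is base64-encoded (or null when no value is stored). The Consul URL and the optional ACL token are placeholders; in a setup like ours, the URL would point at the SSL load balancer.

```python
import base64
import json
import urllib.request
from typing import Dict, Optional


def decode_kv_response(body: bytes) -> Dict[str, str]:
    """Turn a Consul KV JSON response into {key: decoded value}."""
    result: Dict[str, str] = {}
    for entry in json.loads(body):
        raw = entry.get("Value")
        # Values are base64-encoded; keys stored without a value come back as null.
        result[entry["Key"]] = base64.b64decode(raw).decode("utf-8") if raw is not None else ""
    return result


def read_prefix(consul_url: str, prefix: str, token: Optional[str] = None) -> Dict[str, str]:
    """Fetch all keys under prefix. Raises urllib.error.HTTPError (404) if none exist."""
    req = urllib.request.Request(f"{consul_url}/v1/kv/{prefix.strip('/')}?recurse")
    if token:
        req.add_header("X-Consul-Token", token)  # ACL token header accepted by Consul
    with urllib.request.urlopen(req, timeout=10) as resp:
        return decode_kv_response(resp.read())
```

For example, read_prefix("https://consul.example.internal", "templates/123456789012/us-east-1") would return the templates stored for one account and region; the hostname and account number are made up.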

c. Conf.d (GitHub)

We run conf.d, a lightweight Go-based configuration management client, on our servers, with Consul configured as its backend. Conf.d keeps local configuration files up to date by periodically polling the backend for changes to the keys behind each file. Templates are used to handle multiple applications/services when a configuration changes. The traditional way is to run conf.d with Consul as the backend; on top of that, we introduced a directory structure and a wrapper process to support new configuration files.
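The polling behaviour described above can be sketched in Python as follows. This illustrates the idea rather than conf.d's implementation (conf.d itself is written in Go): fetch a snapshot of the keys, compare it with the previous one, and trigger a callback only for keys that changed. The fetch function is injected, so in practice it would query Consul's KV API, while here the loop can be exercised without a Consul server; all names are hypothetical.

```python
import time
from typing import Callable, Dict, Optional, Set

Snapshot = Dict[str, str]


def changed_keys(old: Snapshot, new: Snapshot) -> Set[str]:
    """Keys that were added, removed, or modified between two snapshots."""
    return {key for key in old.keys() | new.keys() if old.get(key) != new.get(key)}


def poll(fetch: Callable[[], Snapshot],
         on_change: Callable[[Set[str], Snapshot], None],
         interval: float = 10.0,
         max_polls: Optional[int] = None,
         sleep: Callable[[float], None] = time.sleep) -> None:
    """Fetch a snapshot every `interval` seconds and report keys changed since the last one."""
    last: Snapshot = {}
    polls = 0
    while max_polls is None or polls < max_polls:
        current = fetch()
        diff = changed_keys(last, current)
        if diff:
            on_change(diff, current)  # e.g. re-render the templates that use these keys
        last = current
        polls += 1
        sleep(interval)
```

Because the first poll starts from an empty snapshot, every key counts as changed, so all files are rendered once at startup, which is what you want when bringing a fresh machine up to date.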

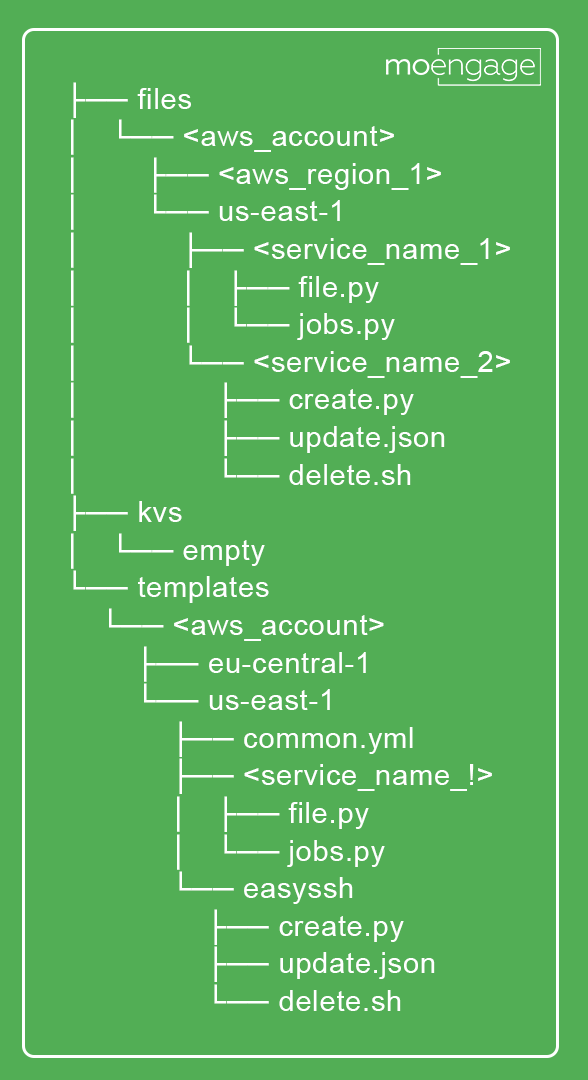

The directory structure looks something like this.

- To support new configuration files, we have set up a base template that tracks common.yml.

- common.yml lists all the templates that need to be copied to the instance.

- Each line of common.yml is a key in Consul that points to a template. You may configure this based on your use case. We are on AWS and went with the following format:

templates/<aws_account_no>/<region>/<service_name>/<configuration_name>.<extension>

- configurator.py reads common.yml line by line and fetches the listed templates from Consul; the fetched templates, in turn, generate the configuration files. This is how we ensure that the configurations stay dynamic.
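A configurator along these lines could look like the following Python sketch. It is hypothetical, not MoEngage's configurator.py: it builds keys in the templates/<aws_account_no>/<region>/<service_name>/<configuration_name>.<extension> format, treats each non-empty line of common.yml as one key (skipping comment lines and stripping an optional "- " list marker, both assumptions about the file's layout), and writes one intermediate conf.d template resource per key. Each resource follows the same shape as the template shown under How it works (a prefix plus a single key, rendered through generic.tmpl). The output directories assume conf.d's default configuration directory, /etc/confd.

```python
from pathlib import Path, PurePosixPath
from typing import Iterable, List


def template_key(account: str, region: str, service: str, name: str, ext: str) -> str:
    """templates/<aws_account_no>/<region>/<service_name>/<configuration_name>.<extension>"""
    return f"templates/{account}/{region}/{service}/{name}.{ext}"


def read_template_keys(lines: Iterable[str]) -> List[str]:
    """Collect one Consul key per meaningful line of common.yml."""
    keys = []
    for line in lines:
        entry = line.strip()
        if entry.startswith("- "):  # assumed optional YAML list marker
            entry = entry[2:].strip()
        if entry and not entry.startswith("#"):
            keys.append(entry)
    return keys


def intermediate_resource(key: str, confd_dir: str = "/etc/confd") -> str:
    """Build a conf.d template resource that copies the value at `key` to a local file."""
    path = PurePosixPath("/" + key.strip("/"))
    # Assumed layout: .toml resources land in conf.d/, anything else (e.g. .tmpl) in templates/.
    subdir = "conf.d" if path.suffix == ".toml" else "templates"
    return (
        "[template]\n"
        'src = "generic.tmpl"\n'
        f'dest = "{confd_dir}/{subdir}/{path.name}"\n'
        'mode = "0644"\n'
        f'keys = [ "{path.name}" ]\n'
        f'prefix = "{path.parent}"\n'
    )


def generate(common_yml: str, out_dir: str) -> List[str]:
    """Write one intermediate resource per key listed in common.yml; return the paths written."""
    written = []
    with open(common_yml) as fh:
        for key in read_template_keys(fh):
            target = Path(out_dir) / (key.strip("/").replace("/", "_") + ".fetch.toml")
            target.write_text(intermediate_resource(key))
            written.append(str(target))
    return written
```

In this sketch, adding a new configuration file to a machine only requires a new line in common.yml and the matching key in Consul; nothing on the machine itself has to change.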

How it works

- Each configuration file is managed by a template, which looks something like this:

    [template]
    src = "generic.tmpl"
    dest = "/etc/service/awesome_conf.py"
    mode = "0644"
    keys = [ "Conf_file.py" ]
    prefix = "/some/prefix"
    reload_cmd = "sudo service awesome_service reload"
    check_cmd = "sudo /usr/bin/awesome_service -t"

- generic.tmpl is responsible for creating the configuration file at the destination, /etc/service/awesome_conf.py, from the value at the prefix + keys[0] path. When the configuration file gets generated, check_cmd is invoked; if its return code is 0, reload_cmd is executed. generic.tmpl looks like this:

    {{range gets "/*"}}{{.Value}}{{end}}

- To manage a configuration file, we need two files in Consul: the configuration file itself and its template, which defines how the service is handled on configuration changes, including the reload command to execute.

- When we first configure the conf.d client on a system, we rely on a base template called common.tmpl, which is responsible for fetching common.yml; common.yml contains the template paths for every service running on that system.

- Once common.yml is in place, the base template's reload_cmd invokes configurator.py, which reads common.yml line by line and generates intermediate templates in the conf.d/templates directory to fetch all the service template files the system needs.

- Finally, once conf.d has fetched the service template files from Consul into its template directory, these templates generate the actual configuration files.
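The render, check, and reload sequence from the steps above can be sketched in Python. This mimics, rather than reproduces, what conf.d does with a template resource: render_generic concatenates the fetched values in key order the way generic.tmpl's range loop does (the key ordering is an assumption of this sketch), and apply_config stages the output next to the destination, runs check_cmd, and only when it exits with 0 installs the file with mode 0644 and runs reload_cmd. As conf.d does, the sketch replaces {{.src}} in check_cmd with the staged file path. The function names are hypothetical and POSIX commands are assumed.

```python
import os
import shlex
import subprocess
import tempfile
from typing import Dict


def render_generic(values: Dict[str, str]) -> str:
    """Concatenate every fetched value in key order, like {{range gets "/*"}}{{.Value}}{{end}}."""
    return "".join(values[key] for key in sorted(values))


def apply_config(content: str, dest: str, check_cmd: str, reload_cmd: str) -> str:
    """Install content at dest only if it changed and check_cmd accepts it; then reload."""
    if os.path.exists(dest):
        with open(dest) as fh:
            if fh.read() == content:
                return "unchanged"
    fd, staged = tempfile.mkstemp(dir=os.path.dirname(os.path.abspath(dest)), prefix=".staged-")
    with os.fdopen(fd, "w") as fh:
        fh.write(content)
    # Validate the staged file first; {{.src}} is replaced with its path, as in conf.d.
    check = subprocess.run(shlex.split(check_cmd.replace("{{.src}}", staged)))
    if check.returncode != 0:
        os.remove(staged)
        return "check_failed"
    os.chmod(staged, 0o644)
    os.replace(staged, dest)  # atomic rename within the same directory
    subprocess.run(shlex.split(reload_cmd), check=True)
    return "applied"
```

Running the check against the staged copy means a broken configuration never replaces the working one, and the service is reloaded only after a successful check.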

Setup requirements and file links:

- A Consul service with an endpoint

- GitHub link for all the files

- The Conf.d client installed on the machine. You can run the following files to set it up:

- Install.sh

- Configure.sh

On the basis of this method, we managed our configuration and steadily grew our infrastructure. Do continue to follow this series as we discuss access control with EasySSH, deployments, and monitoring at MoEngage in the next parts. You can also share this article on social media and tag us with #TechatMoengage. Please feel free to let us know what you think of this article in the comments below.